the following text is my personal notes taken with the aim of being a summary about my knowledge and a path to follow in order to build my own tokenizer from scratch, which you can find by clicking here!

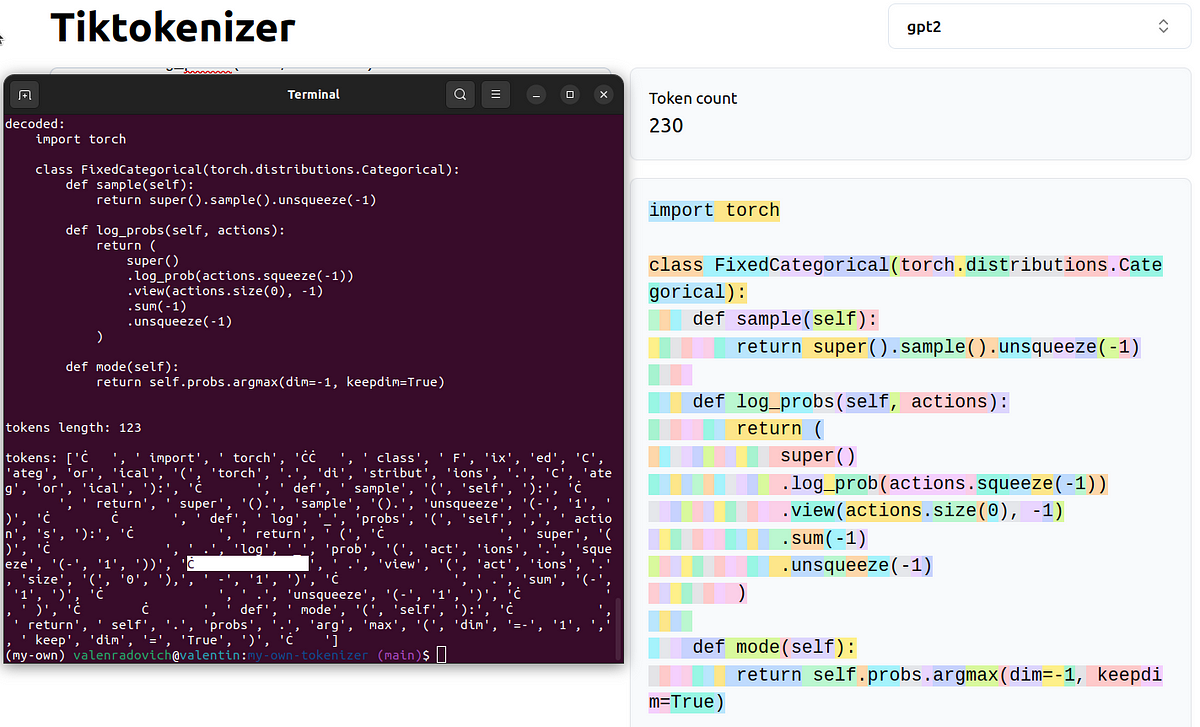

how my tokenizer performs with code tokenization vs gpt-2 tokenizer:

What is a Tokenizer?

A tokenizer is a method of chunking text from a given sentence to encode it into a numerical representation. This process is essential for feeding Language Models (LLMs) with a numerical representation of text, which is something the LLM can understand.

These numbers (representing the text) point to specific ‘coordinates’ in an n-dimensional representation. Each point in that space is a specific representation of a token.

An embedding table is used to encode and decode these tokens.

Tokenizer Vocabulary and Context Size

In GPT-2, the tokenizer vocabulary contains approximately 50,000 tokens (this number increases to about 100,000 in GPT-3.5 and GPT-4). The context size is 1024 tokens, meaning it can pay attention to 1024 tokens at a time.

Challenges with Non-English Languages

One of the main issues with tokenizers and languages was how they tokenized languages other than English. Any sentence in a language with a different alphabet and/or writing system would tokenize into many more tokens than English. This would bloat the text and separate it across too much of the sequence. However, this situation has improved with modern models like GPT-3 or GPT-4.

Side Effects of Tokenization

Several side effects were (or can be) related to tokenization:

- Inability to spell words correctly

- Poor performance in arithmetic

- Better performance with YAML than JSON

- Difficulty with simple string processing

Improvements from GPT-2 to GPT-4

When comparing GPT-2 to GPT-4 tokenizers, we see that the tokenizer has almost doubled the context attention because it’s incredibly better. (We need to consider the context window, the tokens that the model can pay attention to, and the number of tokens generated by the tokenizer.) Why? Simply because the GPT-4 tokenizer uses fewer tokens to represent the same amount of text.

This improvement is particularly noticeable in:

- Japanese and Korean languages

- Code (it’s incredibly better with white spaces)

This leads to a more ‘compressed’ representation of sentences, meaning more context can fit in the attention window.

Byte Pair Encoding (BPE) Algorithm

As we cannot supply the LLM with raw text or bytes (character representation as bytes) because the context length would be useless for attention (any sentence would be too long), we use the Byte Pair Encoding algorithm (BPE):

- All unique characters are initialized to a 1-char long n-gram (initial “token”).

- Successively, the most frequent pair of adjacent characters is related to a new 2-char long n-gram, which will replace the pair of characters in any sentence with this new token generated.

- This workflow is repeated until a vocabulary of ‘x’ size is created. Any new word will be constructed from the final representation (vocabulary of tokens) and the initial set of individual characters.

- All the unique tokens generated from a specific corpus are called the ‘token vocabulary’.

Example from BPE Wikipedia:

aaabdaaabac -> notice pair of 'aa'

ZabdZabac -> pair of 'aa' now is represented as Z

Z=aa

ZYdZYac -> pair of 'ab' is repeated, now represented as Y

Y=ab

Z=aa

XdXac -> pair of 'ZY' is repeated, now represented as X

X=ZY

Y=ab

Z=aa

Tokenizer Architecture

It’s important to note that the tokenizer is completely isolated from the LLM architecture. It has its own training loop and dataset.

To decompose a simple tokenizer, we need:

get_pairs(tokens): Function to obtain the repeated pairs in the dataset (text).merge(tokens, idx_start, pairs): Function to map the top pairs tuple to new ID representation.encode(raw_text): Function to transform raw text into tokens, using the map created withmerge().decode(ids): Function to transform encoded text (IDs in the map we’ve created) to plain text/string.

Special Tokens

Usually, when training the tokenizer with a lot of data, a ‘special token’ is added between each text document. For example, <|endoftext|>. The goal is to ‘explain’ to the tokenizer that a text document ends and a new one starts.

The same happens when fine-tuning the model. All the ‘*-instruct’ models, fine-tuned to handle assistant/chat behavior, have particular special tokens like:

<|im_start|>

<|im_end|>

This is done to denote structure to the model and make it understand when a particular sentence starts and ends.

Regex in GPT-2 and GPT-4 Tokenizers

GPT-2 and GPT-4 tokenizers use specific regex patterns to ensure key conditions when “splitting” text:

GPT2_SPLIT_PATTERN = r"""'(?:[sdmt]|ll|ve|re)| ?\p{L}+| ?\p{N}+| ?[^\s\p{L}\p{N}]+|\s+(?!\S)|\s+"""

GPT4_SPLIT_PATTERN = r"""'(?i:[sdmt]|ll|ve|re)|[^\r\n\p{L}\p{N}]?+\p{L}+|\p{N}{1,3}| ?[^\s\p{L}\p{N}]++[\r\n]*|\s*[\r\n]|\s+(?!\S)|\s+"""

Both tokenizers split on “‘ll”, “‘ve”, and “‘re”, handle numbers, and attach the first space to any word but not the last one. The GPT-4 tokenizer is more comprehensive, working on both lower and upper case, eliminating larger spaces, only capturing numbers up to 3 digits, and more.

Training Tokenizers

For training a non-dummy tokenizer, ‘SentencePiece’ is the most commonly used library in the industry because it can do both training and inference, which is not allowed by tiktoken (OpenAI’s tokenizer). Hugging Face’s tokenizer is also considered good, and is the one I choose.

SentencePiece, developed by Google, is used by LLaMA, Mistral, and other LLMs.

Vocabulary Size Considerations

The choice of vocabulary size (typically between 50k and 100k) is based on experiments. It’s important to consider rare pairs or new tokens, as some newly created tokens could be too rare (appearing only once).

As vocabulary size increases, the model’s embedding table grows, as does the LM head because it has to calculate the logits for more tokens. This leads to increased computational power requirements.

Vocabulary size can be extended during fine-tuning, which would require some minor changes to the model.

thank you for reading!